Researchers and foundation model companies are releasing new models at breakneck speed. In recent weeks alone, we have seen the release of Llama 3.1 405B, GPT-4o mini, and many others. There's a clear trend towards smaller, cheaper, but still smart-enough-to-be-useful models.

At the same time, inference costs keep falling: developers can now access GPT-4o mini-level models at $0.15 per million input tokens and $0.60 per million output tokens. That’s insanely cheap. It is fair to assume that costs will keep dropping as researchers optimize model training and inference techniques.
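
To make "insanely cheap" concrete, here is a small cost estimate using the per-million-token prices quoted above. The workload (1,000 requests, each with 2,000 input tokens and 500 output tokens) and the `estimate_cost` helper are hypothetical, chosen only for illustration; real bills also depend on the provider's current pricing and how your prompts tokenize.

```python
# Per-million-token prices quoted above for GPT-4o mini (USD).
INPUT_PRICE_PER_M = 0.15
OUTPUT_PRICE_PER_M = 0.60


def estimate_cost(requests: int, input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: USD cost of `requests` calls with the given per-call token counts."""
    total_in = requests * input_tokens
    total_out = requests * output_tokens
    return (total_in * INPUT_PRICE_PER_M + total_out * OUTPUT_PRICE_PER_M) / 1_000_000


# Hypothetical workload: 1,000 requests, 2,000 input and 500 output tokens each.
cost = estimate_cost(1_000, 2_000, 500)
print(f"${cost:.2f}")  # prints "$0.60"
```

In this example, two million input tokens and half a million output tokens each cost about $0.30, so a thousand fairly long requests come to roughly sixty cents.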

So, we have an abundance of models (closed and open source) and prices that are bound to keep dropping. In other words, models are becoming a commodity. As a consequence, the value will be created elsewhere:

- At the infrastructure level, big cloud providers and GPU builders will obviously win big. As the saying goes, “During a gold rush, sell shovels.” No one is doing that better now than NVIDIA, Azure, AWS, and, to a lesser extent, GCP.

-

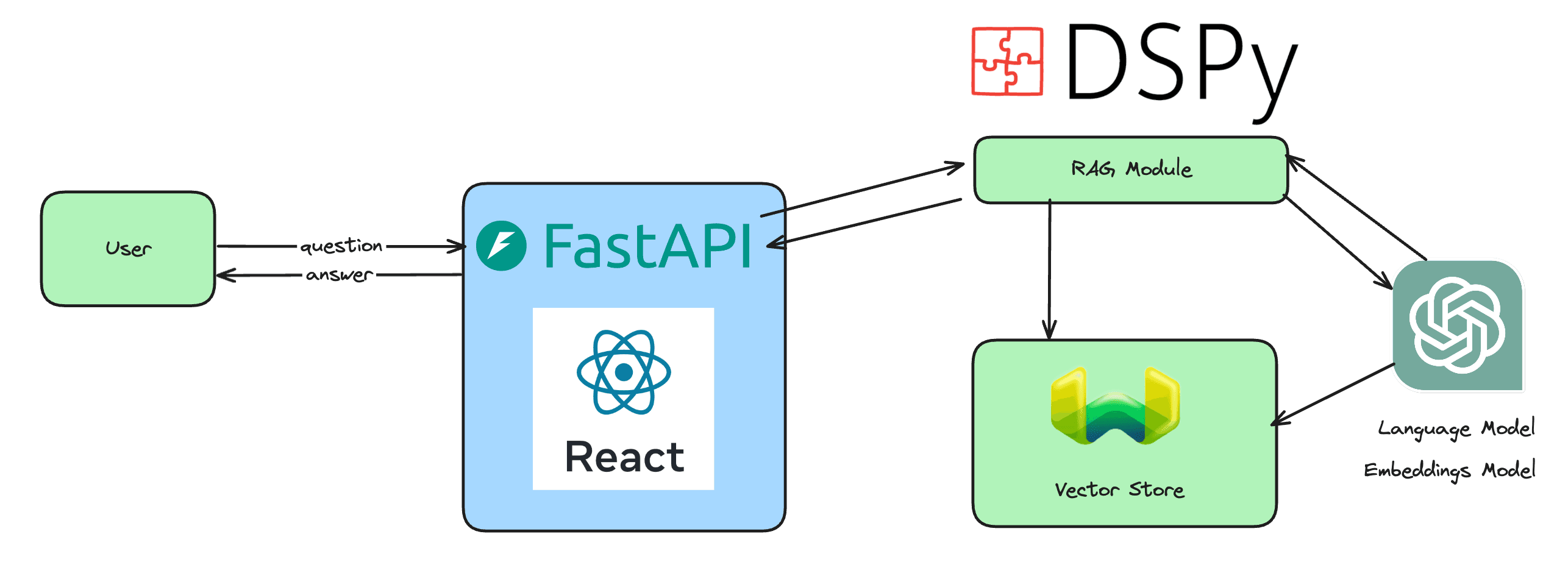

At the application level, frameworks that help developers ship AI demos and products quickly are obviously gaining popularity, traction, and funding, like LangChain and LlamaIndex, even if it is unclear how they will manage to make money. Flask, FastAPI, React, or Django are not money machines, right? Products that help developers monitor AI apps are also getting some funding, but the space seems to have too many offerings now, and some developers don’t yet see the value in using them instead of building a simple custom solution. Also, using AI observability tools brings new challenges. For LLMs, it means giving access to the input/output pairs of users to a third-party API, and that means less privacy for users.
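
As an illustration of what such a "simple custom solution" might look like, here is a minimal sketch of an in-house logger that records each prompt/response pair and its latency to a local JSONL file, so the data never leaves your own machine. Everything here is hypothetical: `log_llm_call`, `LOG_PATH`, and `fake_llm` are made-up names, and `fake_llm` is a stand-in for a real model call, not any provider's SDK.

```python
import functools
import json
import time
from pathlib import Path

LOG_PATH = Path("llm_calls.jsonl")  # hypothetical local log file


def log_llm_call(func):
    """Hypothetical decorator: append each prompt/response pair and its latency to LOG_PATH."""

    @functools.wraps(func)
    def wrapper(prompt: str, *args, **kwargs):
        start = time.perf_counter()
        response = func(prompt, *args, **kwargs)
        record = {
            "timestamp": time.time(),
            "function": func.__name__,
            "prompt": prompt,
            "response": response,
            "latency_s": round(time.perf_counter() - start, 4),
        }
        # Append one JSON object per line; the file stays on local disk.
        with LOG_PATH.open("a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")
        return response

    return wrapper


@log_llm_call
def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call; swap in your provider's client here.
    return prompt.upper()


result = fake_llm("hello")
```

A sketch like this covers basic logging and latency tracking; dashboards, tracing across chained calls, and evaluation are where dedicated observability products try to justify their cost.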

AI SaaS was all the rage a year ago, at the start of the hype, when ChatGPT rocked our world. But most use cases seem to have been explored already, and the quick wins the hype made possible have already been captured (writing/summarizing tools, chat-with-your-documents tools, embedded LLM-based chatbots, AI for interior design, AI for professional photoshoots, AI roleplay, etc.). Each of these use cases has at least a dozen competitors, which makes it difficult to build a profitable SaaS of that kind now. The space is saturated, and users have lost the curiosity of the early hype cycle, the curiosity that made them shop around and try different tools. People have more or less settled into their workflows and are less easily convinced by the latest hyped product. And for most use cases, companies prefer to build in-house or hire consultants to help them, since building a so-called LLM wrapper is not that complicated. So there are huge opportunities for consultants, and fewer for indie hackers and SaaS founders.

A good positioning could be to build and sell tools that help consultants better serve companies developing AI products for their custom use cases. The big question is: how do you make those tools more compelling than open-source alternatives?

The commoditization of foundation models, with almost weekly releases and steadily falling costs, has significant implications for the AI space as a whole. Here are some potential impacts:

- Democratization of AI: Lower costs and the abundance of models make advanced AI technologies accessible to a wider range of developers, startups, and even hobbyists.

- Focus on Specialization and Customization: As the core models become commodities, the value shifts towards specialized applications that cater to niche markets or specific business needs. Companies will invest in customizing these models to differentiate their offerings.

- Increased Importance of Data: The quality and uniqueness of data will become even more crucial. Companies with access to unique, high-quality datasets can fine-tune foundation models to perform better on specialized tasks, creating a competitive edge.

- Consolidation of Power among Infrastructure Providers: As more entities look to deploy AI models, the big cloud providers and infrastructure companies stand to gain significantly. Their platforms become the go-to places for hosting and running these models, leading to increased market concentration.

- Shift in Skill Requirements: As AI adoption spreads, demand for skills will shift, with a growing need for experts in AI ethics, data governance, model customization, and industry-specific knowledge.

- Innovation in AI Management Tools: There will be a surge in tools and platforms designed to manage, monitor, and optimize the deployment of AI models. This includes AI observability, security, and compliance tools.