I recently got access to OpenAI's o1 preview, OpenAI's most capable model when it comes to reasoning. I got access as a tier 4 API developer, the second-highest usage tier in OpenAI's ranking system. Admittedly, I have been using that API quite a lot over the past 2 years…

Immediately after getting access, I knew I had to run my now-infamous chess test for tactical intelligence. My idea is that everyone should start to freak out when an AI system not trained specifically for chess can beat a system built specifically to excel at chess (like the Stockfish engine). Why? For the simple reason that it would mean a system with general intelligence, or at least a high level of general intelligence, is available.

Those of you who read my articles frequently know that I ran a similar experiment with GPT-3.5 and GPT-4. If you want to check the results I got at that time, see the articles below:

Can GPT-4 Beat the Stockfish Engine? Let's Use Langchain to Find Out

Building a Chess Playing Agent Using DSpy

Now let me explain what I did. I first tried my old code based on Instructor, but it didn't work out of the box. After some troubleshooting, I realized this was because o1 preview does not support system messages or tool use, and under the hood, Instructor seems to rely on tool use to generate structured outputs. At least, that was my immediate hypothesis. After checking Instructor's source code, my hypothesis was confirmed.
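Because o1 preview rejected the system role at the time, any instructions that would normally go in a system message have to be carried inside the user turn. The following is a minimal sketch of a helper that does this for an existing message list. `fold_system_messages` is a hypothetical name. It is not part of the OpenAI SDK or Instructor.

```python
from typing import Dict, List

Message = Dict[str, str]


def fold_system_messages(messages: List[Message]) -> List[Message]:
    """Merge system messages into the first user message (hypothetical helper).

    Useful for models that do not accept the "system" role: the system text
    is prepended to the first user turn instead. The input list is not mutated.
    """
    system_parts = [m["content"] for m in messages if m["role"] == "system"]
    rest = [dict(m) for m in messages if m["role"] != "system"]  # shallow copies
    if not system_parts:
        return rest
    prefix = "\n\n".join(system_parts)
    for m in rest:
        if m["role"] == "user":
            m["content"] = f"{prefix}\n\n{m['content']}"
            return rest
    # No user turn at all: send the instructions as a user message instead.
    return [{"role": "user", "content": prefix}] + rest


messages = [
    {"role": "system", "content": "You are a chess grandmaster."},
    {"role": "user", "content": "Given this board, suggest the next move."},
]
print(fold_system_messages(messages))
```

This only solves the system-message half of the problem. Structured output without tool use still needs another approach, such as the second parsing call described next.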

A little digression here. I really enjoyed reading the source code of the Instructor library; I found it pretty neat. Instructor is also one of the rare Python libraries in the AI space with little to no unnecessary abstraction. Frankly, I wonder why Instructor is less popular than some other bloated library that I won't name. I guess developers love unnecessary complexity, especially when it comes disguised as some advanced functionality you absolutely need to master.

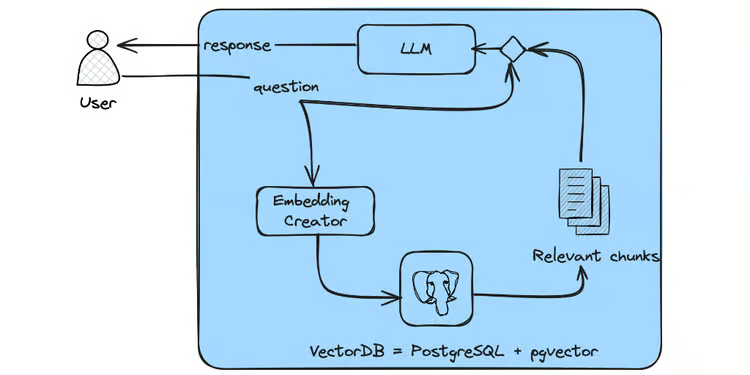

Anyway, after that initial troubleshooting, I came up with an imperfect yet working solution to let o1 preview play against the Stockfish engine. Remember, the key here is structured output generation. Why? Because that's how I get the move to play from the LLM (in algebraic notation). My solution is simple: I first use one call to o1 preview to generate the best move given the state of the board. That answer from o1 preview is not structured, so I use another model, namely GPT-4o, to parse o1 preview's response into a structured output that I can then use to play o1 preview's recommended move.

Simple, right? Just a bit costly token-wise. For reference, each game between o1 preview and Stockfish cost me approximately $7.
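The second GPT-4o call exists only to extract the move. A cheaper, though more brittle, alternative is to pull the move out of o1 preview's free-text answer with a regular expression and then check it against the list of legal moves. This is a hypothetical sketch, not what the article's code does. It assumes the model writes its move in UCI-style notation such as e2e4, which is the format the prompt asks for, and `extract_move` is an illustrative name.

```python
import re
from typing import Iterable, Optional

# UCI-style long algebraic notation: from-square, to-square, optional
# lowercase promotion piece (e.g. e2e4, e7e8q).
UCI_MOVE = re.compile(r"\b([a-h][1-8][a-h][1-8][qrbn]?)\b")


def extract_move(answer: str, legal_moves: Iterable[str]) -> Optional[str]:
    """Return the last UCI-style move in `answer` that is also legal, or None."""
    legal = set(legal_moves)
    candidates = UCI_MOVE.findall(answer)
    # Assumption: the model states its final choice last, so scan from the end.
    for move in reversed(candidates):
        if move in legal:
            return move
    return None


answer = "I considered d7d5, but the best move here is e7e5."
print(extract_move(answer, ["e7e5", "d7d5", "g8f6"]))  # e7e5
```

With python-chess, the legal list could be built with `[m.uci() for m in board.legal_moves]`. The trade-off is that this saves one API call per move, but it returns `None` whenever the model answers in SAN (for example `Nf3`), and it can pick up a move the model only mentioned in passing. A second model call can handle both cases more gracefully.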

Here is the implementation. As stated previously, I used the Instructor library, OpenAI's models, and a Stockfish engine binary that I drive via the python-chess library. I first define the NextMove class, a subclass of Pydantic's BaseModel. This is the class used to force the output of my GPT-4o calls into a specific format (move and reasoning).

I also initialize the Stockfish engine I am going to use.

```python
import instructor
from openai import OpenAI
from dotenv import load_dotenv
from pydantic import BaseModel, Field
import chess
import chess.engine
from dataclasses import dataclass

# load environment variables (e.g. OPENAI_API_KEY) from a .env file
load_dotenv()

# Instructor-patched client for structured outputs, plain client for free text
llm = instructor.from_openai(OpenAI())
raw_llm = OpenAI()


class NextMove(BaseModel):
    move: str = Field(..., description="The best move to win the chess game. It should be in standard algebraic notation.")
    reasoning: str = Field(..., description="Reasoning explaining why the move is the best one.")


# stockfish
engine = chess.engine.SimpleEngine.popen_uci("/opt/homebrew/Cellar/stockfish/16/bin/stockfish")
```

Secondly, I define my ChessAgent as a dataclass. The ChessAgent primarily stores the board state, the list of legal moves (because otherwise LLMs tend to hallucinate illegal moves), the history of moves played so far, the feedback from previous generations (useful, for example, when the LLM generates an illegal move), and finally the next move, a placeholder that is filled once o1 preview's recommended move has been generated and parsed.

The ChessAgent also has a __post_init__ method, which runs immediately after the generated __init__. I use that method to implement the process of generating the next move: the move is first generated by o1 preview, then parsed into a structured response by GPT-4o.
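Because __post_init__ runs as soon as the dataclass is constructed, creating a ChessAgent is what actually triggers the two model calls. The stripped-down sketch here shows that mechanic offline. `StubChessAgent`, `fake_reasoner`, and `fake_parser` are hypothetical stand-ins for the agent and for the o1 preview and GPT-4o calls. They are not real API wrappers.

```python
from dataclasses import dataclass
from typing import Optional


def fake_reasoner(prompt: str) -> str:
    """Hypothetical stand-in for the o1 preview call: returns free text."""
    return "After careful thought, I would play e7e5."


def fake_parser(text: str) -> str:
    """Hypothetical stand-in for the GPT-4o parsing call: takes the last word."""
    return text.rstrip(".").split()[-1]


@dataclass
class StubChessAgent:
    board_state: str
    legal_moves: str
    history: str
    feedback: Optional[str] = None
    next_move: Optional[str] = None  # output field, filled by __post_init__

    def __post_init__(self) -> None:
        # Runs right after the generated __init__ has assigned every field,
        # so simply constructing the object triggers both "model calls".
        prompt = (
            f"Board: {self.board_state}; legal moves: {self.legal_moves}; "
            f"history: {self.history}; feedback: {self.feedback}"
        )
        self.next_move = fake_parser(fake_reasoner(prompt))


agent = StubChessAgent(board_state="<board>", legal_moves="e7e5, d7d5", history="['e2e4']")
print(agent.next_move)  # e7e5
```

Doing the work in __post_init__ keeps the call site to a single expression, at the cost of making object construction fire network requests. This is worth keeping in mind whenever agents are created inside a retry loop.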

```python
@dataclass
class ChessAgent:
    board_state: str
    legal_moves: str
    history: str
    feedback: str = None
    # next move is the output field; it is None initially
    next_move: NextMove = None

    def __post_init__(self):
        # Step 1: ask o1 preview for the next move (free text, user message only,
        # since o1 preview does not accept system messages)
        # Note: e2e4-style moves are long algebraic (UCI) notation rather than SAN;
        # python-chess's parse_san() also accepts them.
        response = raw_llm.chat.completions.create(
            model='o1-preview',
            messages=[
                {"role": "user", "content": f"You are chess grand master. Given the current state of the chess board: {self.board_state}, legal moves: {self.legal_moves}, history of moves so far: {self.history}, and feedback on the previous move generated: {self.feedback}, generate the next move. The next move should be in standard algebraic notation like e2e4, e7e5, c6d4 etc."},
            ]
        )
        # pass only the answer text (not the whole response object) to the parser
        answer = response.choices[0].message.content

        # Step 2: ask GPT-4o, via Instructor, to parse that answer into a NextMove
        self.next_move = llm.chat.completions.create(
            model='gpt-4o',
            response_model=NextMove,
            messages=[
                {"role": "user", "content": f"Parse the next move from this answer: {answer} The next move should be in standard algebraic notation like e2e4, e7e5, c6d4 etc."},
            ]
        )
```

Finally, I have the play_game() function, which initializes and runs the game between the Stockfish engine and o1 preview until it ends by checkmate, stalemate, insufficient material, or another terminating condition.
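The get_agent_move helper inside play_game() keeps re-prompting the agent with feedback in a `while True` loop. A model that keeps producing illegal moves would therefore keep consuming paid calls. A bounded variant is one way to cap that. The following sketch is not the article's code. It uses a hypothetical `propose` callable that stands in for one ChessAgent round trip, taking the feedback string and returning a move.

```python
from typing import Callable, Optional, Set


def get_move_with_retries(
    propose: Callable[[str], str],
    legal_moves: Set[str],
    max_attempts: int = 3,
) -> Optional[str]:
    """Ask `propose` for a legal move, feeding back errors, at most `max_attempts` times."""
    feedback = ""
    for _ in range(max_attempts):
        move = propose(feedback)
        if move in legal_moves:
            return move
        # Same idea as the article's loop: tell the model what went wrong.
        feedback = f"Move {move} is not legal. Should be one of {sorted(legal_moves)}."
    # Out of attempts: the caller decides what to do (resign, random legal move, ...).
    return None


# Fake model that gets it right on the second try.
answers = iter(["e2e5", "e2e4"])
print(get_move_with_retries(lambda feedback: next(answers), {"e2e4", "d2d4"}))  # e2e4
```

Returning `None` instead of raising leaves the fallback policy to play_game(), which is where the game-level decision belongs.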

```python
def play_game():
    moves = []
    board = chess.Board()

    def get_agent_move(board):
        # re-prompt the agent, with feedback, until it produces a legal move
        feedback = ""
        while True:
            print(feedback)
            next_move = ChessAgent(
                board_state=str(board),
                legal_moves=str(board.legal_moves),
                history=str(moves),
                feedback=feedback
            ).next_move.move
            try:
                move = board.parse_san(next_move)
                print(move)
                if move in board.legal_moves:
                    return move
                else:
                    print(board.legal_moves)
                    feedback = f"Agent's generated move {next_move} is not a legal move. Should be one of {str(board.legal_moves)}."
            except Exception as e:
                feedback = f"Failed to parse this agent's {next_move}. Error: {e}. Should be one of {str(board.legal_moves)}."

    # Stockfish plays White (board.turn is True), o1 preview plays Black
    while not board.is_game_over():
        if board.turn:
            result = engine.play(board, chess.engine.Limit(time=0.1))
            board.push(result.move)
            moves.append(result.move.uci())
        else:
            move = get_agent_move(board)
            board.push(move)
            moves.append(move.uci())
        print(board)
        print("\n\n")

    winner = ""
    if board.is_checkmate():
        # the side to move is the side that has been checkmated
        if board.turn:
            winner = "Black"
        else:
            winner = "White"
    elif board.is_stalemate() or board.is_insufficient_material() or board.is_seventyfive_moves() or board.is_fivefold_repetition() or board.is_variant_draw():
        winner = "Draw"

    if winner == "Black":
        return "Agent wins by checkmate!"
    elif winner == "White":
        return "Stockfish wins by checkmate!"
    else:
        return "The game is a draw!"


n_games = 5
results = {
    "Agent wins": 0,
    "Stockfish wins": 0,
    "Draw": 0
}

for i in range(n_games):
    print(f"Starting game {i+1}...")
    result = play_game()
    print(result)
    if "Agent wins" in result:
        results["Agent wins"] += 1
    elif "Stockfish wins" in result:
        results["Stockfish wins"] += 1
    else:
        results["Draw"] += 1
    print(f"Game {i+1} completed.\n\n")

# shut down the Stockfish process once all games are done
engine.quit()

print(f"Final results after {n_games} games:")
print(f"Agent wins: {results['Agent wins']}")
print(f"Stockfish wins: {results['Stockfish wins']}")
print(f"Draws: {results['Draw']}")
```

I ran this game several times, and guess what? o1 preview, the amazing model that some people call the first LRM (Large Reasoning Model), never came close to beating the Stockfish engine. Even more damning, it didn't exhibit any form of tactical intelligence and made quite obvious tactical mistakes instead.

Here is an analysis of one of the games:

In this chess game, White, likely controlled by Stockfish, achieved a victory by checkmate. Here's a breakdown of key moments in the game:

Opening Phase:

White played 1. e4, aiming for control of the center.

Black responded with 1… e5, mirroring White's central control.

White's knight development to f3 followed, attacking the e5 pawn, and Black responded by developing the knight to c6.

Mid-Game:

The game transitioned quickly into a tactical battle, with both players focusing on development and minor piece activity.

A central exchange occurred after White pushed the pawn to e4 and Black's pawn captured on d4.

White gained a significant edge after several exchanges of knights, leaving Black's defenses weaker and pieces less coordinated.

White was able to advance pieces actively, especially with the queen on d8 pressuring Black.

Critical Error by Black:

Black made a tactical error when trying to exchange pieces and left the king exposed by moving e8-g8 (kingside rook and bishop positioning). This allowed White's queen to infiltrate the position.

A rook and queen combination for White exploited the open lines and diagonals around the Black king.

Checkmate:

White delivered checkmate with a well-coordinated attack involving the queen, supported by the knight and rook. Black was unable to provide enough defense, and White checkmated with the queen on f8.

This game demonstrated White's strong positional play, tactical awareness, and efficient piece coordination, while Black struggled after the initial opening phase, leading to a swift defeat.

A swift defeat …

What can we conclude from this? While impressive to some extent, o1 preview still fails to exhibit robust planning and reasoning. It lacks the skills needed to excel at chess without chess-specific training. Use o1 preview with caution, especially in novel situations or in situations that require complex reasoning or planning.