I decided to set up a chess tournament between LLMs to gauge their relative strategic thinking and tactical intelligence.

Chess is commonly thought of as a game that demands both strategic planning and precise tactical calculation. That makes it a useful structured environment for observing how these models perform, particularly since LLMs are not specifically trained to play chess.

This setup allowed me to assess the emergent behaviors of LLMs: capabilities that arise without direct training for the task at hand. Can LLMs excel at chess without any specialized training?

The Setup

Before diving into the code, let me explain the setup.

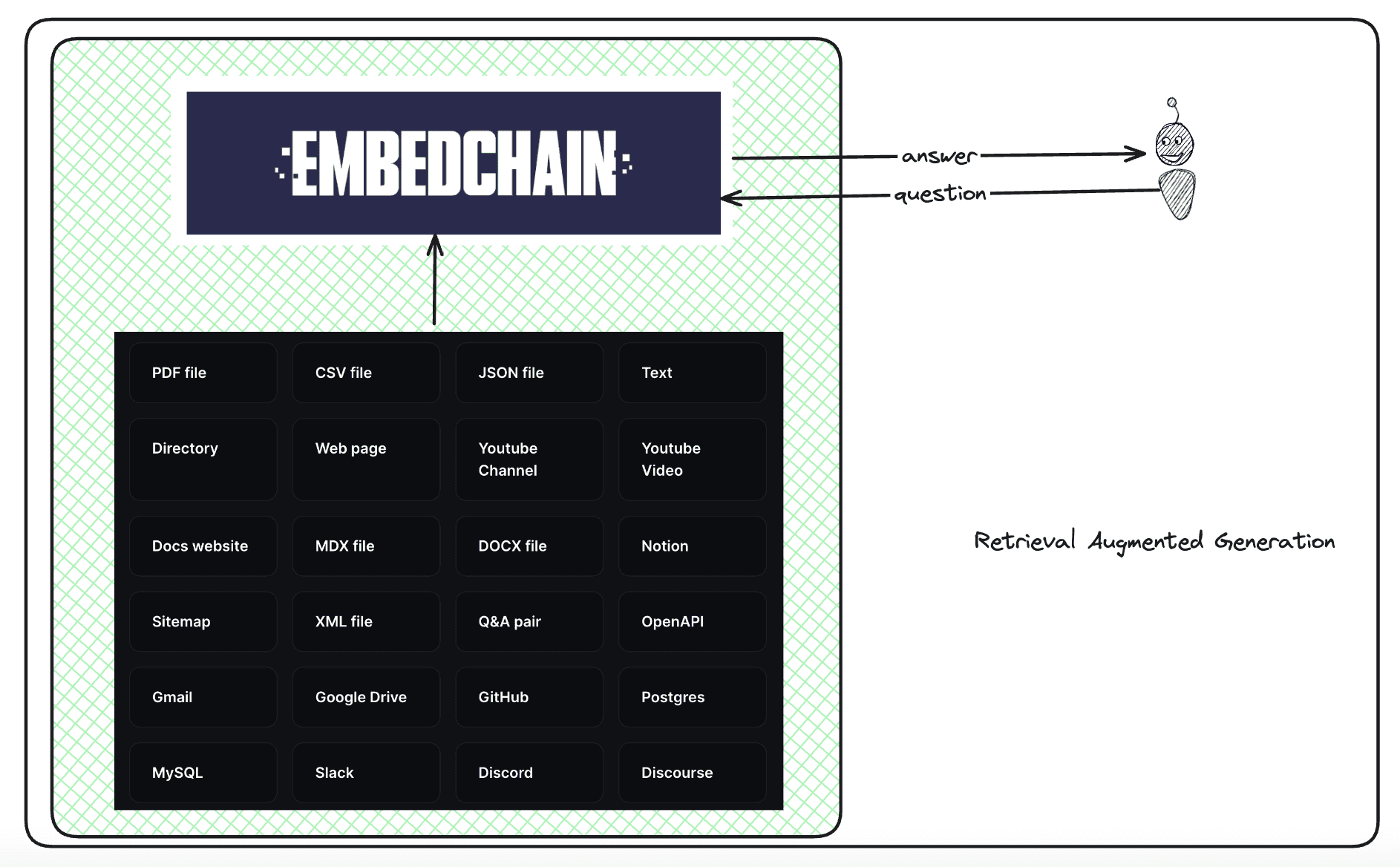

I used OpenRouter together with OpenAI's client library to access various LLMs. OpenRouter is a fantastic tool: it provides access to a diverse array of LLMs through an OpenAI-compatible API, so all of them can be called with the OpenAI client.

LLM rankings on OpenRouter
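Because OpenRouter's API is OpenAI-compatible, a model can be queried by pointing the official `openai` Python client at OpenRouter's base URL. The sketch below illustrates that pattern under stated assumptions: the prompt wording, the `OPENROUTER_API_KEY` environment variable name, and the helper names `build_move_messages` and `ask_model_for_move` are hypothetical, not the repository's actual code.

```python
import os

# OpenRouter serves an OpenAI-compatible API at this base URL.
OPENROUTER_BASE_URL = "https://openrouter.ai/api/v1"


def build_move_messages(fen: str, legal_moves: list[str]) -> list[dict[str, str]]:
    """Build a chat prompt asking for a single move (hypothetical prompt wording)."""
    return [
        {
            "role": "system",
            "content": "You are a chess player. Reply with one move in UCI notation and nothing else.",
        },
        {
            "role": "user",
            "content": f"Position (FEN): {fen}\nLegal moves: {', '.join(legal_moves)}\nYour move:",
        },
    ]


def ask_model_for_move(model: str, fen: str, legal_moves: list[str]) -> str:
    """Send the prompt to a model on OpenRouter via the OpenAI client and return its raw reply."""
    # Imported lazily so the prompt helper above works without the openai package installed.
    from openai import OpenAI

    client = OpenAI(
        base_url=OPENROUTER_BASE_URL,
        api_key=os.environ["OPENROUTER_API_KEY"],  # assumed environment variable name
    )
    response = client.chat.completions.create(
        model=model,  # example OpenRouter IDs: "openai/gpt-4o", "anthropic/claude-3.5-sonnet"
        messages=build_move_messages(fen, legal_moves),
    )
    return (response.choices[0].message.content or "").strip()
```

The model's reply is free text, so whatever calls this still has to check that the returned string is actually one of the legal moves before playing it.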

In some of my tests, I also used the Stockfish chess engine. If you want to try it, you'll need to install it first. It's straightforward; for example, you can use Homebrew:

```shell
brew install stockfish
```

Naturally, Stockfish beat every LLM it played against. That is expected: Stockfish is a dedicated chess engine, while the LLMs were never specifically trained to play chess.
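The article doesn't show how the code communicates with Stockfish, and a library such as python-chess is a common choice for that. As a dependency-free illustration, here is a hypothetical sketch that drives a locally installed `stockfish` binary over the UCI protocol using only the standard library; the function names and the 100 ms default move time are assumptions.

```python
import subprocess
from typing import Optional


def parse_bestmove(line: str) -> Optional[str]:
    """Extract the move from a UCI 'bestmove <move> [ponder <move>]' line."""
    parts = line.split()
    if len(parts) >= 2 and parts[0] == "bestmove":
        return parts[1]
    return None


def stockfish_best_move(fen: str, movetime_ms: int = 100, path: str = "stockfish") -> str:
    """Ask a local Stockfish binary for its best move in the given position (FEN)."""
    proc = subprocess.Popen(
        [path], stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True
    )

    def send(command: str) -> None:
        proc.stdin.write(command + "\n")
        proc.stdin.flush()

    def wait_for(token: str) -> None:
        # Read engine output line by line until a line equal to `token` appears.
        while True:
            line = proc.stdout.readline()
            if not line:
                raise RuntimeError(f"Stockfish exited before sending {token!r}")
            if line.strip() == token:
                return

    try:
        send("uci")
        wait_for("uciok")
        send("isready")
        wait_for("readyok")
        send(f"position fen {fen}")
        send(f"go movetime {movetime_ms}")
        # Skip 'info ...' lines until the engine reports its chosen move.
        while True:
            line = proc.stdout.readline()
            if not line:
                raise RuntimeError("Stockfish exited without sending bestmove")
            move = parse_bestmove(line)
            if move is not None:
                return move
    finally:
        proc.kill()
        proc.wait()
```

Calling `stockfish_best_move` with the FEN of the starting position returns a move in UCI long algebraic notation, a string of the form `e2e4`. Starting a fresh process per move keeps the sketch simple; a real tournament loop would more likely keep one engine process open for the whole game.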

For the remaining evaluations, I removed the Stockfish engine and organized games solely between LLMs.

Evaluating Performance

The code calculates and updates each player's Elo rating after every game, and it works well. According to these relative ratings, Claude-3.5-sonnet seems to play chess better than GPT-4o.
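The article doesn't spell out the exact formula or K-factor the code uses, so here is a minimal sketch of the standard Elo update, assuming K = 32 (a common choice). The function names are hypothetical.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of player A against player B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(
    rating_a: float, rating_b: float, score_a: float, k: float = 32.0
) -> tuple[float, float]:
    """Return both players' new ratings after one game.

    score_a is 1.0 if A wins, 0.5 for a draw, and 0.0 if A loses.
    k is the K-factor (assumed 32 here); larger values make ratings move faster.
    """
    e_a = expected_score(rating_a, rating_b)
    e_b = 1.0 - e_a
    new_a = rating_a + k * (score_a - e_a)
    new_b = rating_b + k * ((1.0 - score_a) - e_b)
    return new_a, new_b
```

For example, `update_elo(1500, 1500, 1.0)` returns `(1516.0, 1484.0)`. A draw between equally rated players leaves both ratings unchanged, while a draw against a lower-rated opponent costs the higher-rated player points, so a long run of draws tends to pull ratings toward each other.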

Unfortunately, I did not run this evaluation across enough models to build a full leaderboard, because it's quite time-consuming and somewhat costly. If someone is willing to fund this, I'd be happy to run a more extensive competition.

I also noticed many draws between models, which tends to distort the Elo ratings slightly. I'm currently developing a new evaluation strategy that considers performance throughout the game, not just the final result, so that Elo can be calculated more accurately. If anyone has suggestions on this, please reach out on X or via email at franck@lycee.ai.

Code Structure

Here’s a quick overview of the code structure:

- main.py: The entry point; run it to start the tournament. It loads the models from models.py, sets the number of games each pair of models plays, and starts the tournament.
- models.py: Contains the list of models participating in the tournament.
- chess_model.py: Defines the ChessModel class, which manages interactions with each chess-playing model, whether it's an LLM or the Stockfish engine.
- play_game.py: Contains the logic for running a single game between two models, including move validation and Elo rating adjustments.
- tournament.py: Orchestrates the overall tournament: it schedules matchups, tracks results, and updates model ratings.
- requirements.txt: Lists all the dependencies required to run the tournament.
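The list above doesn't show how tournament.py schedules matchups. One plausible approach is a round robin in which every pair of models plays a fixed number of games with colors alternating; the hypothetical sketch below illustrates that idea and is not taken from the repository (the function name and model names are placeholders).

```python
from itertools import combinations


def round_robin_schedule(models: list[str], games_per_pair: int) -> list[tuple[str, str]]:
    """Return (white, black) pairings in which every pair plays games_per_pair games."""
    schedule: list[tuple[str, str]] = []
    for a, b in combinations(models, 2):
        for game in range(games_per_pair):
            # Alternate colors so neither model always gets the first-move advantage.
            white, black = (a, b) if game % 2 == 0 else (b, a)
            schedule.append((white, black))
    return schedule
```

With three models and two games per pair, this yields six games, and each pair plays once with each color.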

Contributing

That's it: nothing fancy or overly complex! If you find any issues, please let me know on GitHub, and don't hesitate to contribute. The repository is released under the MIT license, so let's work together to build a comprehensive leaderboard of strategic planning and tactical intelligence.

GitHub Repository

Here’s the link to the GitHub repository:

https://github.com/fsndzomga/chess_tournament_openrouter


Happy coding!