RAG (Retrieval-Augmented Generation) demos are easy. RAG in production is much harder. I know that because I did a lot of RAG demos since last year, and I also shipped www.discute.co in production. So, I have experienced the two sides of the coin.

One thing that is hard to do in production is preventing malicious users from nudging the bot into responding to queries it is not supposed to answer. Let’s say you deploy a customer service chatbot. You want the chatbot to reply to queries about your product or services. You do not want it to reply to queries about your competitors’ products or about general questions about the 2024 US presidential election. Now, depending on the prompts you use to craft the final response that will be sent to the user, one part of the problem is solved usually. You can prompt your LLM to only respond based on the information provided in context. But that approach is not strong enough and can be easily hijacked by clever prompt injection techniques.

The consequences of deploying AI systems without robust safeguards can indeed be significant for companies, as illustrated by a recent incident involving Air Canada. The airline was held liable after its chatbot provided a passenger with incorrect information. This case underscores the critical importance of ensuring that AI-driven customer service tools, like chatbots, are equipped with accurate, up-to-date information and are capable of managing queries with a high degree of reliability.

For companies, this case acts as a cautionary tale about the legal and reputational risks associated with deploying AI technologies. It emphasizes the need for rigorous testing, ongoing monitoring, and rapid response mechanisms to correct inaccuracies or miscommunications provided by AI systems. Furthermore, it may lead to increased regulatory scrutiny on how companies deploy and manage AI-driven customer service technologies, ensuring they meet certain standards of accuracy and reliability.

To solve the issue more robustly, one can add a classifier specifically trained to recognize the kind of questions we want our chatbot to answer and discard those that are not suitable. The solution is thus to add that classifier upstream of the RAG system to filter out bad or dangerous user requests.

Classifier and standard RAG (only similar search)

But that’s easier said than done. You will need to have enough data to train a classifier. The data could be a set of questions you expect users to ask and potentially the kind of questions you want to avoid responding to at all costs. This requires careful collection and curation of data, as well as an understanding of the nuances of user queries and potential loopholes that could be exploited.

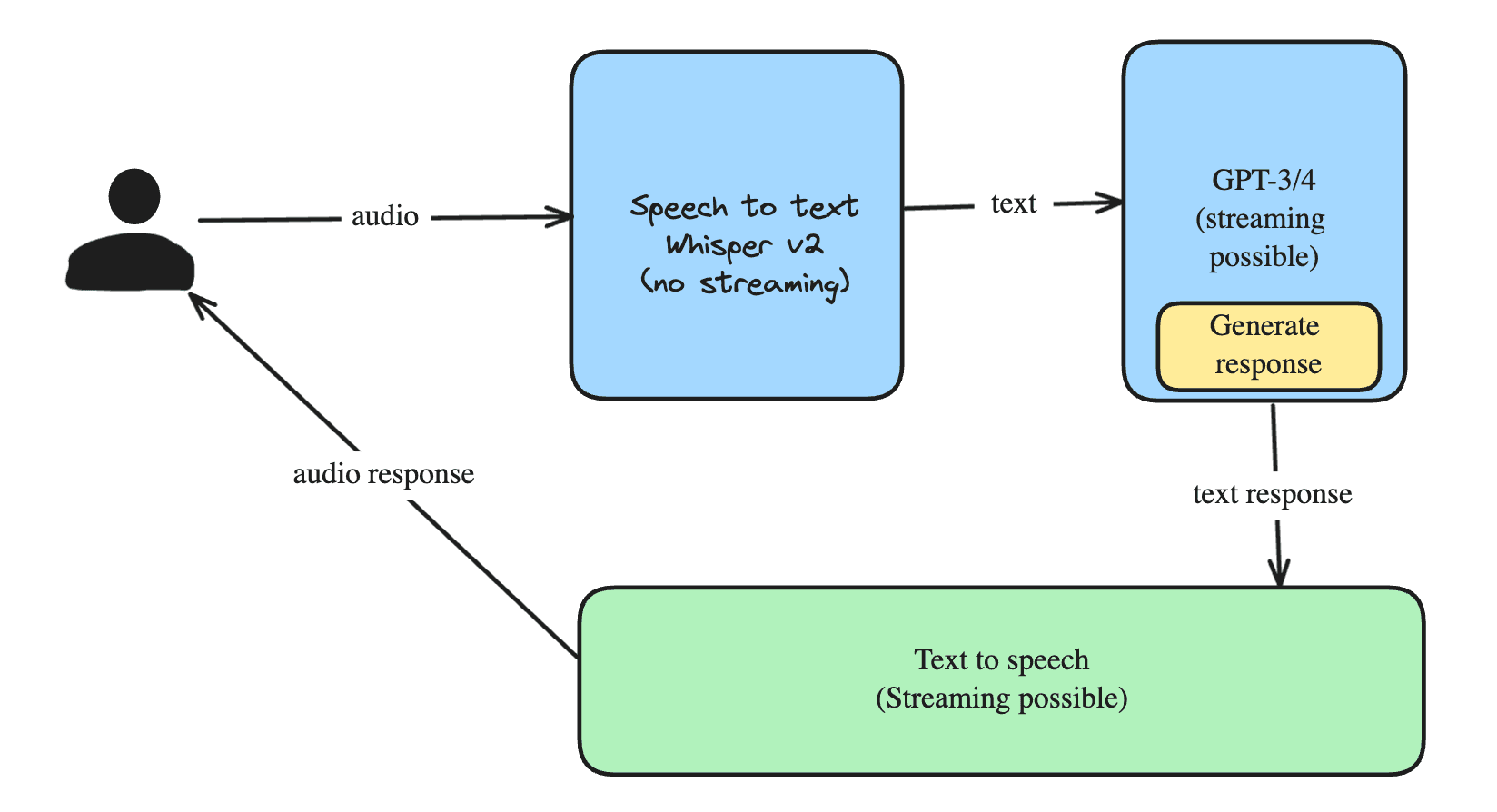

Here is a representation of what the system would look like with a standard RAG subsystem:

- Classifier: This is the first layer of defense against inappropriate or out-of-scope queries. It analyzes the incoming user questions and determines whether they fall within the predefined scope of acceptable queries. This classifier is trained on a dataset of expected questions and the types of questions to avoid, effectively acting as a gatekeeper.

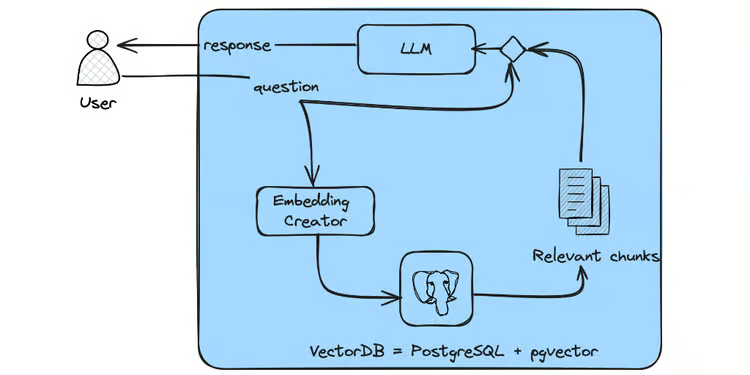

- Standard RAG Subsystem: For queries that pass through the classifier, the RAG subsystem then takes over. It uses a combination of retrieval and generation mechanisms to provide relevant and accurate responses based on the provided context and the corpus of knowledge it has access to. The standard RAG approach typically involves similarity search.

- Combining BM25 and Semantic Search: For better results, it can also be interesting to combine BM25 (a traditional information retrieval technique that scores documents based on the frequency of the query terms they contain) with semantic search capabilities. This hybrid approach leverages both the precision of keyword-based retrieval and the nuance of semantic understanding, providing more relevant and contextually appropriate responses.

Classifier and BM25 + semantic search

Implementing a RAG system in production, especially for customer-facing applications, requires a multi-layered approach to ensure both relevance and safety in responses. The combination of a robust classifier to filter queries, along with advanced retrieval and generation techniques, represents a comprehensive solution for deploying effective and secure AI-powered chatbots in real-world scenarios.

Learn how to do all that using DSPy:

- Start learning how to program language models using DSPy. Here's the perfect course for that.

- After mastering the basics, explore the advanced use cases of DSPy. Here is the ideal course for that.