Photo by Christian Wiediger on Unsplash

Google search, the goose that lays golden eggs for Alphabet, might be in danger. After disrupting so many aspects of our lives in the past two years, AI startups are now ready to take on search.

The pioneer of this shift is Perplexity AI. Launched in August 2022, the platform aims to give users direct answers to their queries, accompanied by relevant sources and citations. Since its inception, Perplexity AI has grown significantly, reaching 15 million monthly users by the first quarter of 2024.

Now, even OpenAI has entered the AI search space with the launch of SearchGPT, an AI-powered search engine integrated with ChatGPT.

This is an important trend, one that you might need to understand to succeed in the generative AI space.

As Richard Feynman famously put it: “What I cannot create, I do not understand.” So what better way to understand AI search than by building a demo of Perplexity AI? We will call it Entropix.

Entropix is open source. You will have access to the code on GitHub. The goal of this article is thus to give you the overall picture of the architecture and the key aspects to consider. For implementation details, you will have to refer to the source code.

Anatomy of Entropix


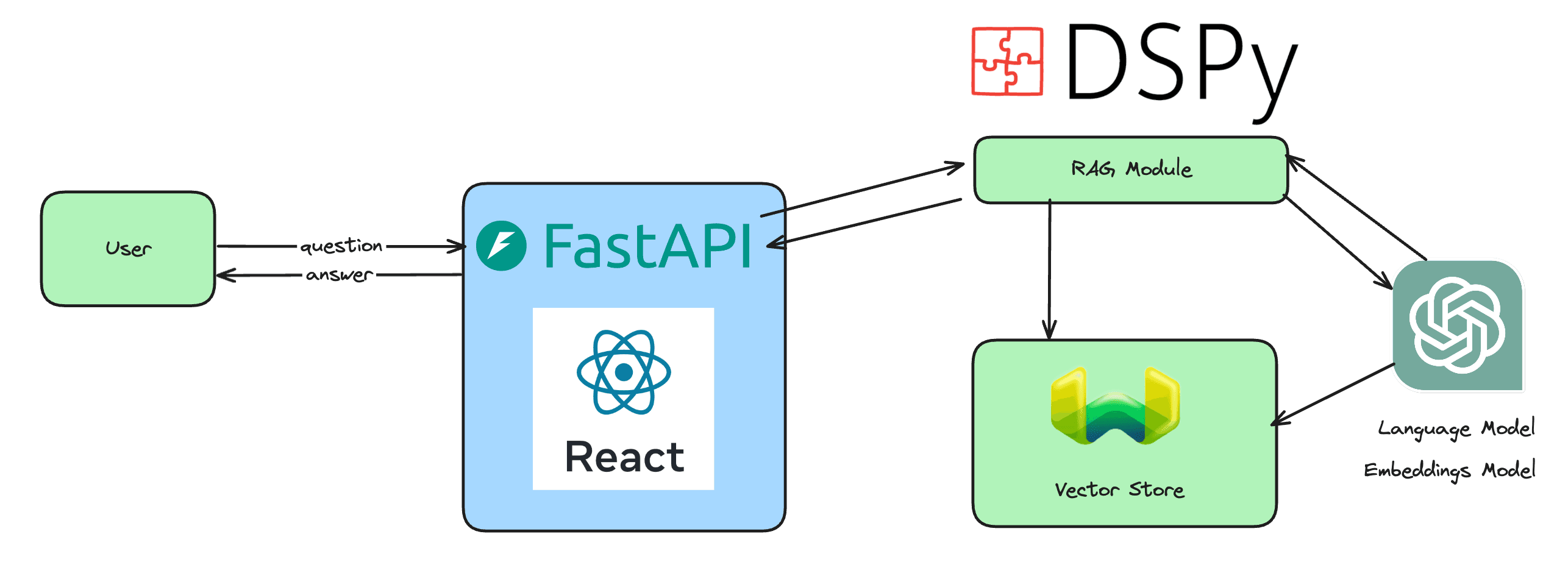

The three main components of Entropix are the LLM, the search API, and the UI interpreter.

When the user enters a search query, the LLM (Llama-3.1-405B from Nebius AI Studio in our case) processes it to generate a search request. The search request is a JSON object produced with the guided JSON approach (read more here). It contains two search queries to pass to the search API.

In this demo, we are using SerpApi to get Google search results. More advanced use cases might require the creation of a custom search index.

```python
from typing import Generator
from pydantic import BaseModel, Field
from openai import OpenAI
from dotenv import load_dotenv
import os
import json
from serpapi.google_search import GoogleSearch
from datetime import datetime

# Load NEBIUS_API_KEY from a local .env file
load_dotenv()

# Nebius AI Studio is reached through the OpenAI client with a custom base URL
client = OpenAI(
    base_url="https://api.studio.nebius.ai/v1/",
    api_key=os.environ.get("NEBIUS_API_KEY"),
)


class SearchRequest(BaseModel):
    "Parameters for search request on google search"
    first_query: str = Field(..., title="first search query to be used on google search")
    second_query: str = Field(..., title="second search query to be used on google search")


def search_query(user_input: str) -> dict:
    # Ask the LLM to turn the user's question into two Google queries,
    # including today's date so the queries target recent results
    messages = [
        {
            "role": "user",
            "content": f"""Generate search request parameters for '{user_input}'
            Add today's date to the search query to get the most
            recent results: {datetime.now().strftime('%Y-%m-%d')}
            """
        }
    ]
    completion = client.chat.completions.create(
        model="meta-llama/Meta-Llama-3.1-405B-Instruct",
        messages=messages,
        extra_body={
            # Constrain the output to the SearchRequest JSON schema
            'guided_json': SearchRequest.model_json_schema()
        }
    )
    # The message content is a JSON string matching SearchRequest
    return json.loads(completion.choices[0].message.content)
```

The search queries generated by the LLM are used to fetch search results from SerpApi. These results serve two purposes: they populate the link overview at the top of the response, and they are fed back to the LLM so it can generate the answer.
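To make the search step more concrete, here is a minimal sketch of how the two queries could be sent to SerpApi and merged into a single list for the link overview. It is not taken from the Entropix repository: the helper names (`fetch_organic_results`, `merge_results`, `search_web`), the `SERPAPI_API_KEY` environment variable, and the result limit are assumptions made for illustration.

```python
import os
from typing import Iterable


def fetch_organic_results(query: str, api_key: str) -> list[dict]:
    """Run one Google search through SerpApi and return its organic results."""
    # Imported lazily so merge_results can be used without the package installed
    from serpapi.google_search import GoogleSearch

    response = GoogleSearch({"q": query, "api_key": api_key}).get_dict()
    return response.get("organic_results", [])


def merge_results(result_lists: Iterable[list[dict]], limit: int = 8) -> list[dict]:
    """Merge results from several queries, dropping duplicate links, keeping order."""
    seen: set[str] = set()
    merged: list[dict] = []
    for results in result_lists:
        for item in results:
            link = item.get("link")
            if not link or link in seen:
                continue  # skip entries without a URL and repeated URLs
            seen.add(link)
            merged.append({
                "title": item.get("title", ""),
                "link": link,
                "snippet": item.get("snippet", ""),
            })
    return merged[:limit]


def search_web(request: dict) -> list[dict]:
    """Hypothetical glue: run both queries from a SearchRequest-shaped dict."""
    api_key = os.environ["SERPAPI_API_KEY"]  # assumed variable name
    queries = [request["first_query"], request["second_query"]]
    return merge_results(fetch_organic_results(q, api_key) for q in queries)
```

With these helpers, `search_web(search_query("latest Llama release"))` would return a deduplicated list of titles, links, and snippets that can feed both the link overview and the answer prompt.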

Finally, the LLM consumes the data provided by SerpApi and crafts a response to the user query.
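The answer step could look roughly like the sketch below. It assumes the search results are dicts with `title`, `link`, and `snippet` keys, numbers them so the model can cite them, and streams the reply from an OpenAI-compatible client. The function names and the prompt wording are illustrative assumptions, not the actual Entropix prompt.

```python
from datetime import date
from typing import Any, Iterator


def build_answer_messages(user_input: str, results: list[dict], today: date) -> list[dict]:
    """Build a chat prompt that grounds the answer in numbered search results."""
    sources = "\n".join(
        f"[{i}] {r['title']} ({r['link']}): {r.get('snippet', '')}"
        for i, r in enumerate(results, start=1)
    )
    prompt = (
        f"Today's date is {today.isoformat()}.\n"
        "Answer the question using only the sources below, "
        "citing them as [1], [2], ...\n\n"
        f"Sources:\n{sources}\n\nQuestion: {user_input}"
    )
    return [{"role": "user", "content": prompt}]


def stream_answer(client: Any, messages: list[dict], model: str) -> Iterator[str]:
    """Yield the answer piece by piece from an OpenAI-compatible client."""
    stream = client.chat.completions.create(
        model=model, messages=messages, stream=True
    )
    for chunk in stream:
        # Some chunks carry no choices or no text (for example the final one)
        if chunk.choices and chunk.choices[0].delta.content:
            yield chunk.choices[0].delta.content
```

Passing the `client` and model name in as arguments keeps the prompt-building logic easy to test on its own, and streaming lets the UI show the answer as it is generated.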

One surprising discovery when building Entropix was that adding the date of the request to ground the tool in time made it so much more useful. It was amazing to see how such a simple detail could have such a big impact.

Entropix UI


Here is the link to Entropix’s source code. It is a relatively simple demo. Feel free to extend it and make it better!

Happy coding!